This is the text of a keynote address that I gave at the AIIDE conference in Marina Del Rey on June 3rd, 2005.

History records several "Golden Ages", astounding times of creative and intellectual achievement. One of these was Athens in the 4th century BCE; another was Florence during the Renaissance. In each case, some magical confluence of forces triggered an explosion of ideas in literature, sculpture, science, philosophy, and mathematics.

I believe that, centuries from now (should civilization survive despite itself), historians will look back upon this period as a Golden Age: the Golden Age of Computers. But I suspect that historians will perceive the Golden Age in terms quite different from our own preferences. I think that they will see the Golden Age deriving from the flowering of the computer as a medium of artistic expression, not merely a computational device. Our goal in this conference is to consider how we might maximize our contribution to this dawning Golden Age, and I would like to offer some suggestions based on my experiences in interactive storytelling.

My first observation is that this Golden Age will emerge from the confluence of technology and art, and this of course requires a communion between technologists and artists. This communion, I must warn you, will be immensely difficult to achieve, because technologists and artists are fundamentally different creatures. They think differently, and the differences in their thinking are profound.

Those differences are so fundamental that they can be described in terms of neurophysiology. You of course know that neurons are fundamentally pattern-recognizing devices; all nervous systems are designed to accept a pattern of sensory inputs and respond with a pattern of motor outputs. Nervous systems have two major requirements: that they produce some response, any response, and that they do so quickly. We notice a dark shape hunched in a dark alley and think we see a dangerous criminal -- even though it’s just a garbage can.

There’s a completely different way of processing, though, and that got started about 100 million years ago. It has been developing all this time, but perhaps the most striking milestone in this development process was Aristotle’s invention of the syllogism. This, coupled with abstraction, made possible formal sequential reasoning: what we now call logic, which underlies all of mathematics, science, and technology and provides the basis for the military, scientific, technological, and economic success of the West in the last 500 years.

Let’s contrast this sequential reasoning with the pattern-based reasoning used by all nervous systems. The two are very different, but they’re not quite orthogonal. We can get an idea of how different they are by considering the contortions that one system must be put through to emulate the other system.

Consider, for example, the amount of effort that must be expended in order for a sequential processor (a conventional computer) to emulate a nervous system by means of neural net software. This requires thousands of lines of code, megabytes of memory, and millions of machine cycles to function. Yes, a conventional computer can "fake" pattern-based reasoning, but it’s not easy.

In the same way, we can train our pattern-processing minds to "fake" sequential reasoning. The educational process for this starts in early high school. I ask you to harken back to those early years and recall the confusion you experienced in algebra and geometry. What was all this stuff about theorems and proofs? It was certainly a weird and difficult way to think, and you had to really twist your mind around to understand it, but after much effort, you could do it. It takes years of additional effort to truly master this unnatural form of thinking, but people do it and they become valuable experts in our society. But the difficulty we must overcome in doing so indicates just how far apart pattern-based reasoning and sequential reasoning are.

Here’s the problem we face in encouraging the convergence of art and technology: art relies on pattern-based reasoning and technology relies on sequential reasoning. These two fields are completely different at the most fundamental level. Is it any wonder that the much-touted "marriage of Silicon Valley and Hollywood" was such a dismal failure? Artists and techies are completely different intellectual species, from different cognitive planets. The first thing you should do when you encounter an artist is to enunciate, slowly and plainly, "Take me to your leader."

The contribution I offer today is a set of suggestions on how to handle this problem. My first suggestion is: never, ever underestimate the magnitude of the difference between the artists and the technologists. Minimizing this difference is certain to doom any collaboration between the two species.

Some negative ideas

I would next like to offer some negative suggestions -- things to avoid. I have four:

First, do not commit hubris. This word, "hubris", is an import from Greek, but we didn’t carry over all the connotations of the original term. In the original Greek, hubris was closely connected with the notion of "the natural order of things". There was a proper place for everything and everybody. A man who over-reached his place in the natural order of things was guilty of hubris. It wasn’t so much a sin as a violation of the natural order of things. Also associated with the idea of hubris was the inevitability of a comeuppance. The gods would see to it that every act of hubris eventually resulted in a suitably ironic comeuppance.

We have a good example of hubris from the near-simultaneous release a few summers ago of two computer-animated films: Shrek and Final Fantasy. The former was built in the traditional manner, with writers doing the writing, animators doing the animation, and computer people building the software. The result was a fine product that was a smashing success. Final Fantasy, however, was an act of hubris. A successful computer game designer decided that he could arrogate to himself the authority to write a good story. So he led the project and exercised creative control over every aspect of it. The gods frowned on this act of hubris and punished him for it: Final Fantasy was not a market success.

Do not be guilty of hubris. If you are a techie, stick to your techie responsibilities. Leave the artsy stuff to the artsies.

Second, do not fool yourself into believing that you are a Renaissance man. Yes, Leonardo da Vinci was a genius and an inspiration to us all and we should all aspire to his greatness -- but don’t forget that, in the entire history of humankind, there was only one Leonardo da Vinci. There were a handful of genuine Renaissance men. The odds against your being another Renaissance man should be great enough to overpower even the most egomaniacal of us.

I offer myself as an example of what really happens when you aspire to be a Renaissance man. I was trained as a physicist, and I taught myself programming. I was able, over the years, to design and program more than a dozen computer games, some of them arguably good. But I am no great stuff as a programmer; I use pedestrian programming methods and my code is often a mess. I have to admit it: I’m a half-assed programmer. And as an artist, well, I can make some claims to artistry based on my published games. There are some rather nicely done elements in those games. But again, I have to admit the bitter reality that I can’t hold a candle to a real artist; I’m just a half-assed artist. Now, being a half-assed programmer and a half-assed artist doesn’t make me a Renaissance man -- it makes me a complete ass. So keep my sorry condition in mind whenever you have fantasies of being a Renaissance man. Store those fantasies next to the fantasies about seducing whatever sex goddess strikes your fancy, or saving the world from evil terrorists.

Third, don’t create a collaboration in which the artist is reduced to a back seat driver. Every creative person needs a hands-on relationship with the work. Don’t push the artist away from the computer and require him/her to submit requests to a certified computer user. Do you remember the bad old days of batch jobs at university computer centers? They worked the same way, and it was horridly inefficient.

Fourth, ditch the cliches about "teamwork". Inspirational talk about how we’re all going to respect each other and listen to each other’s ideas and gollygee, just have a lot of fun working together -- these ideas work just fine for salesmen and Wal-Mart greeters, but high-strung prima-donnas like techies and arties just aren’t impressed by inspirational posters.

Some positive ideas

Now for some constructive ideas on how to improve collaboration between artist and techie. The starting point for this discussion is the age-old Crawford’s First Law of Software Design: "Whenever considering the architecture of any piece of software, always ask, what does the user do?" A more specific wording of this law is "What are the verbs?" Remember, we’re not talking about interpassivity here, we’re talking about interactivity, and action is expressed through verbs. Thus, the verb list for any piece of software defines that software completely.

Hence the first step in my proposed procedure for artist/techie collaboration: prepare a list of the verbs. The artist has creative control here, but the techie’s task is not flaccid. The techie has two onerous responsibilities during this process. First, the techie must nag, nag, nag the artist to specify the verbs with precision. For example, suppose that our artist desires a verb for making deals. This is a good idea -- but now the techie must begin the nagging process. Are deals confined to direct exchanges of physical property (e.g., "my cow for your house")? Can deals also include variable values such as money (e.g., "my cow for four of your shekels")? Can deals include services as well as objects (e.g., "my cow for your hoeing my field")? What about temporal considerations; does the artist want deferred deals (e.g., "my cow tomorrow for your hoeing the field today")? This kind of nagging will surely infuriate the artist, but that’s the techie’s job in this case.

A second responsibility for the techie is to filter the verb list for technical feasibility. As the artist spins off all those glorious ideas for new verbs, the techie should have data structures and algorithms running through his/her mind. When the artist suggests a verb such as "transcend to a new plane of existence", the techie should make detailed inquiries into the precise nature of this verb to determine if it is technically feasible.

With a draft verb list, the techie is ready to begin building a model to realize the verb list. This time, the techie is in the driver’s seat; the artist should be kept at arm’s length from these deliberations. The techie retains a responsibility to keep the artist posted as to the implications of the model, both positive and negative, for the verb list. Surely the verb list will suffer from losses during this process, but the techie should be able to balance those losses with some gains.

The techie’s most onerous responsibility, however, is the creation of a development environment for the artist. I found, to my dismay, that my interactive storytelling project required perhaps four times as much effort for the development environment as for the model. With the benefit of hindsight, I realize that this seems obvious. After all, a model merely crunches numbers, but a development environment must present the workings of the model in a manner readily accessible to the artist. The development environment must incorporate every complexity of the model, and on top of that, must provide a variety of means for controlling those complexities.

The techie has complete creative control over the development environment; yet in this task the techie also plays the role of servant to the artist. This is a particularly difficult role to play, because the artist will be tempted to demand particular features from the techie, who must exercise critical judgment in the fulfillment of those demands. Seldom do artists truly appreciate the best way to organize a development environment. Their needs are real, but their vision of how to fulfill those needs is often flawed. The techie must respect the needs without deferring to the flawed technical judgments of the artists.

Scripting languages

Ultimately, every artist must express him/herself in algorithmic terms, and that in turn requires some kind of programming language. This is a fundamental requirement over which there can be no compromise. Interactivity cannot be defined with data; it is a process and as such can be defined only through processual means: algorithms. The artist who cannot learn self-expression through algorithms can never go far in the interactive medium.

Obviously, we don’t want to impose the outrages of Java, C++, or any of the other general-purpose languages upon the artist; such an act would surely be deemed worthy of prosecution by the International Criminal Court as a crime against humanity. We must create something smaller, more manageable, and more specific to the problem at hand. We must create a scripting language, not a programming language. I offer here some guidelines for creating optimal scripting languages for artists.

Logomorphic languages, not alphabetic languages

My first suggestion is that every scripting language should be logomorphic, not alphabetic. To appreciate this suggestion, I must take you back to the days of yore when writing systems were first emerging. All early writing systems were logomorphic: each glyph (symbol) represented an entire word. These systems worked perfectly well in the limited contexts for which writing was required: bills of lading, keeping track of goods, tax receipts, and so forth. For small vocabularies with perhaps a hundred words, logomorphic systems were ideal. Later, as writing was applied to wider applications, the logomorphic approach began to show its limitations, and various means of extending it were tried. But the real solution turned out to be the alphabetic system, which used glyphs representing tinier components of language: single sounds rather than entire words. The alphabetic system works quite well with vocabularies of many thousands of words.

Remember, however, that a scripting language does not need, and should not be burdened with, thousands of words. It should have a compact, concise vocabulary. Therefore, a logographic system is more appropriate than an alphabetic one. To put it in plain terms: dump the keyboard and use icons.

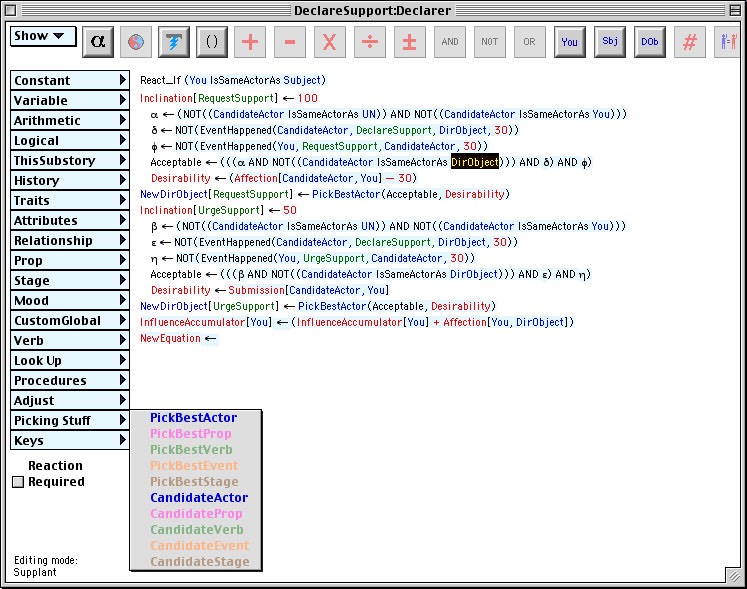

Actually, icons may not be the best solution, as icons are often confusing. I suggest that a scripting language be composed of words. Not constructed-of-letters words, but complete, unitary words selected from menus. Here’s an example of the scripting system in my old Erasmatron interactive storytelling development environment:

Each of the words in this script is obtained from the set of menus along the left edge of the screen. There are nearly 400 different words available in this scripting language. In this image, the word "DirObject" has been selected, and a menu along the left has been opened, showing some of the options available to replace the selected word.

Why is this system superior to an alphabetic one? Think in terms of the user experience. With an alphabetic system, the user must type in keywords, and hope that he has recalled and spelled them correctly. With 400 keywords, that requires considerable memorization from the user. This logographic system makes all the keywords accessible via a tree structure that makes it easy for the user to figure out just where his desired word will lie.

More important, this system never allows syntax errors. A typewritten script is sure to contain misspellings, incorrect arguments, or other syntax errors. This system obviates all syntax errors by making it impossible to select a keyword that is syntactically incorrect. The simple expedient of disabling menu items guarantees syntactic correctness.
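To make the menu-disabling idea concrete, here is a minimal Python sketch. It is not the Erasmatron’s code: apart from "DirObject", the keyword names, the `Keyword` class, and the helper functions are hypothetical, and it assumes that syntactic correctness can be reduced to matching the data type expected at the selected slot.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Keyword:
    name: str
    returns: str  # data type the keyword evaluates to


# Hypothetical vocabulary; the real language has nearly 400 words.
VOCABULARY = [
    Keyword("Subject", "Actor"),
    Keyword("DirObject", "Actor"),
    Keyword("Affection", "Number"),
    Keyword("IsMale", "Boolean"),
    Keyword("CurrentStage", "Stage"),
]


def menu_for_slot(expected_type):
    """Return (keyword name, enabled) pairs for a slot of the given type.

    Keywords of the wrong type stay visible but disabled, so the user
    can never insert a word that would be syntactically incorrect.
    """
    return [(kw.name, kw.returns == expected_type) for kw in VOCABULARY]


def selectable(expected_type):
    """Names of the keywords the user is actually allowed to pick."""
    return [name for name, enabled in menu_for_slot(expected_type) if enabled]


print(selectable("Actor"))  # ['Subject', 'DirObject']
```

Because the check happens when the menu is built rather than when the script is run, there is no parser and no error message for the artist to decipher.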

No hidden requirements

Another benefit of this logomorphic approach is the elimination of hidden requirements. In general-purpose languages (and many scripting languages), every variable must be declared before it can be used. Every function call must have the correct number and type of arguments. The user must guess the hidden requirements -- usually unsuccessfully.

With this logographic approach, selecting a function automatically causes it and its requirements to be displayed, like so:

In this example, the function "Benefit" has just been chosen. It provides the user with prompts specifying the four arguments to the function.
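A rough Python sketch of how such prompts might be generated follows. The argument names and types given for "Benefit" are invented for illustration (the text says only that it takes four arguments), and `SIGNATURES` and `insert_function` are hypothetical names.

```python
# Hypothetical signature table: function name -> list of (prompt, data type).
# The four arguments shown for "Benefit" are assumptions, not the real ones.
SIGNATURES = {
    "Benefit": [
        ("Who", "Actor"),
        ("ToWhom", "Actor"),
        ("What", "Prop"),
        ("HowMuch", "Number"),
    ],
}


def insert_function(name):
    """Return the text placed in the script when a function is selected.

    Every argument appears immediately as a labeled placeholder, so the
    user sees all of the function's requirements instead of guessing.
    """
    prompts = ", ".join(f"<{arg}: {typ}>" for arg, typ in SIGNATURES[name])
    return f"{name}({prompts})"


print(insert_function("Benefit"))
# Benefit(<Who: Actor>, <ToWhom: Actor>, <What: Prop>, <HowMuch: Number>)
```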

Hard data typing

Another useful concept here is hard data typing. Back in the good old days, there was just one data type: a byte. Modern programming languages have abstracted the concept of a data type into an object. Artists don’t need such an abstract concept; I have found that just eight data types cover the needs of interactive storytelling:

Actors

Props

Stages

Numbers

Booleans

Verbs

Events

By color-coding them I make the concept of data typing more digestible to the artist.

Poison

You may wonder how I handle run-time errors. The conventional approach was to let the system crash, slapping the wrist of the programmer. Modern languages provide trapping facilities that permit general responses to any error. This is too abstract a solution for most artists; I have come up with something that works in interactive storytelling but might not work for other applications: poison.

Whenever a run-time error is generated, my code traps it out, sets a "poison" flag, and terminates further processing of that script. The effect is to simply nullify any options that are poisoned. The modular nature of the scripts in the Erasmatron makes it possible for this strategy to be effective. If a run-time error arises, a particular path in the storyworld is blocked, but other paths remain. The story doesn’t crash; it just gets a bit more narrow.
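The strategy can be sketched in a few lines of Python. This is an illustration under assumptions, not the Erasmatron’s implementation: scripts are modeled as plain callables, the option names are made up, and any Python exception stands in for a run-time error.

```python
def evaluate_option(script):
    """Run one option's script and return (value, poisoned)."""
    try:
        return script(), False
    except Exception:
        # Trap the error, set the poison flag, and stop processing this script.
        return None, True


def surviving_options(options):
    """Keep only the options whose scripts ran without being poisoned."""
    results = {}
    for name, script in options.items():
        value, poisoned = evaluate_option(script)
        if not poisoned:
            results[name] = value
    return results


# Hypothetical options for one moment in a storyworld.
options = {
    "apologize": lambda: 0.4,
    "flee": lambda: 1 / 0,  # run-time error: this path is blocked
    "retaliate": lambda: 0.7,
}

print(sorted(surviving_options(options)))  # ['apologize', 'retaliate']
```

The poisoned option simply disappears from the set of choices, while the other paths remain available.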

No flow control

My most surprising recommendation is the elimination of all flow control from scripting languages for artists. Most technical people blink at this recommendation; after all, a program without branching or looping cannot possibly be useful or interesting.

The "catch" is that I don’t really eliminate flow control -- I subsume it into other functions. The simplest manifestation of this is the concept of the "role". We all know what a role is in stories. I extended this concept to make it a bit more abstract. Instead of assigning one role to one actor for the duration of the story, each event has its own set of "micro-roles" (which I nevertheless call "roles"). Each role represents a particular dramatic context in relation to that event. For example, if we have an event "Joe punched Fred", then we might have three roles associated with that event:

1. Punchee: the actor (Fred) who got punched.

2. Big brother of punchee: an actor who is male, kin to DirObject, and strong.

3. Girlfriend of punchee: an actor who is female and has strong affection for DirObject.

Each of these three roles would have different options. The role itself is defined by a set of logical conditions (e.g., male, kinship, affection). We techies would normally write a set of if-statements to handle this. However, with the Erasmatron scripting system, I merely tell the artist that each role is defined by a boolean expression and any actor who fits that boolean expression will play that role. Yes, that’s still an if-statement, even if it’s cleverly packaged. But the artist need not think in terms of flow control. The concept has been subsumed into a natural part of the artist’s thinking.
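Here is a small Python sketch of roles as boolean expressions, using the "Joe punched Fred" example. The `Actor` fields, the extra characters Tom and Mary, and the affection threshold of 0.5 are assumptions made for illustration; the engine’s real data model is not described in this talk.

```python
from dataclasses import dataclass, field


@dataclass
class Actor:
    name: str
    male: bool
    strong: bool = False
    kin: set = field(default_factory=set)          # names of relatives
    affection: dict = field(default_factory=dict)  # name -> value in [-1, 1]


# Each role is nothing more than a boolean expression over
# (candidate actor, DirObject of the event).
ROLES = {
    "Punchee": lambda a, d: a is d,
    "Big brother of punchee": lambda a, d: a.male and d.name in a.kin and a.strong,
    "Girlfriend of punchee": lambda a, d: (not a.male)
    and a.affection.get(d.name, 0) > 0.5,
}


def cast_roles(actors, dir_object):
    """Map each role to the names of the actors who satisfy its expression."""
    return {
        role: [a.name for a in actors if test(a, dir_object)]
        for role, test in ROLES.items()
    }


joe = Actor("Joe", male=True)
fred = Actor("Fred", male=True, kin={"Tom"})
tom = Actor("Tom", male=True, strong=True, kin={"Fred"})
mary = Actor("Mary", male=False, affection={"Fred": 0.9})

print(cast_roles([joe, fred, tom, mary], fred))
```

The loop and the if-test live inside `cast_roles`; the person writing the roles supplies only the conditions.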

I was able to subsume the concept of the loop into a single function that I call "PickBest". Experience showed me that almost all storybuilder need for loops arose when the storybuilder needed the script to select one item from a group, based on some set of requirements. I was able to digest this to a single concept that includes an internal loop, without ever exposing the storybuilder to the loop. The basic format of the PickBest function is:

PickBest(Type, Acceptability, Desirability)

In this function, "Type" is the type of object being picked (Actor, Stage, Prop, etc); "Acceptability" is a boolean expression filtering out inappropriate objects; and "Desirability" is a numeric expression specifying the factors that make one object more desirable than another. The storybuilder fills in the terms and the engine picks the object that meets the requirements. If none meets the requirements, then the script is poisoned.
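A minimal Python sketch of the same idea follows. It is an approximation: the candidates are passed in as a list rather than looked up by "Type", the `Poisoned` exception is a hypothetical stand-in for the poison flag, and the stage data is invented.

```python
class Poisoned(Exception):
    """Signals that the enclosing script should be abandoned."""


def pick_best(candidates, acceptability, desirability):
    """Return the acceptable candidate with the highest desirability.

    candidates:    every object of the requested type
    acceptability: function(candidate) -> bool, filters out bad choices
    desirability:  function(candidate) -> number, higher is better
    """
    acceptable = [c for c in candidates if acceptability(c)]
    if not acceptable:
        # Nothing meets the requirements, so the script is poisoned.
        raise Poisoned("no candidate meets the requirements")
    return max(acceptable, key=desirability)


# Hypothetical stages: pick the nearest one that is not crowded.
stages = [
    {"name": "tavern", "crowded": True, "distance": 1},
    {"name": "garden", "crowded": False, "distance": 3},
    {"name": "cellar", "crowded": False, "distance": 2},
]

best = pick_best(stages, lambda s: not s["crowded"], lambda s: -s["distance"])
print(best["name"])  # cellar
```

The loop is hidden inside the list comprehension and `max`; the storybuilder writes only the two expressions.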

Artistic terminology

Every profession has its cant. We all need those special terms that more precisely specify concepts particular to our profession. Moreover, fluency with cant is the best external indicator of internal expertise; accordingly, most professionals ladle on the cant. This always creates a problem when two professions attempt to interact; neither can understand the other’s cant.

It should be obvious that techies need to throttle back on the cant when dealing with arties. However, I shall go even further and suggest that, whenever possible, a development environment should be constructed around the terminology of the arties.

Here’s a simple example of how I did this in the Erasmatron. My engine had provision for physical objects that could be manipulated by the actors; in a burst of linguistic creativity, I termed them things. A year later, I changed the term to prop. This entailed no significant changes in the code and instantly improved the accessibility of the model to the storybuilders.

Similar considerations led me to use terms such as actor, stage, role, and rehearsal. In some cases, such as with the term role, the original meaning was modified to suit the special needs of the storytelling model. In other cases, however, I adjusted the model to meet the expectations of the artist.

High-level testing aids

Lastly comes the need to provide the artist with high-level testing facilities. We programmers are usually happy with a debugger; we have developed our bug-sniffing senses to such a degree that we can sniff out a bug with a few cunningly placed breakpoints. But debuggers require a deep feeling for flow control, something I have already declared inappropriate for artists. Instead, we need to provide the artist with higher-level performance tests.

For example, I have an elaborate facility built into the Erasmatron that I call the rehearsal. This facility generates many runs through the storyworld with slightly different initial conditions, and compiles a statistical overview of the results. It keeps track of every poisoning and its circumstances. It generates profiles of activity that show which verbs were heavily used -- and which were seldom used. It monitors loopyboobies (behavior loops into which actors can become mired) and threadkillers (verbs that trigger no responses and so kill off the action).
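A toy version of such a rehearsal can be sketched in Python. Everything here is hypothetical: the five-verb storyworld is invented, random choices stand in for the slightly different initial conditions, and only verb usage and threadkillers are tallied (poisonings and loopyboobies are left out to keep the sketch short).

```python
import random
from collections import Counter

# Toy storyworld: each verb maps to the verbs that may follow it.
# "sulk" triggers no responses, so it is a threadkiller.
STORYWORLD = {
    "greet": ["insult", "flatter"],
    "insult": ["punch", "sulk"],
    "flatter": ["greet"],
    "punch": ["insult"],
    "sulk": [],
}


def run_once(rng, max_steps=20):
    """Play one story; return (verbs used, threadkiller verb or None)."""
    verb, used = "greet", []
    for _ in range(max_steps):
        used.append(verb)
        responses = STORYWORLD[verb]
        if not responses:
            return used, verb  # the action died here
        verb = rng.choice(responses)
    return used, None


def rehearse(runs=200, seed=0):
    """Run the storyworld many times and compile usage statistics."""
    rng = random.Random(seed)
    usage, killers = Counter(), Counter()
    for _ in range(runs):
        used, killer = run_once(rng)
        usage.update(used)
        if killer is not None:
            killers[killer] += 1
    return usage, killers


usage, killers = rehearse()
print(usage.most_common())  # which verbs were heavily or seldom used
print(killers)              # which verbs killed off the action, and how often
```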

Generalizations for the future

Much of this discussion has concentrated on my own Erasmatron as the working example. I would now like to offer some broad generalizations for entertainment software in the future:

Increasing verb count

The vocabulary size of games hit a ceiling of about twenty verbs early on; a few games have managed to push higher, but they have appealed to special audiences. The problem lies in the input structures used in games. Direct mapping of verbs onto the screen establishes the ceiling; recourse to single keystrokes adds a handful of additional vocabulary slots, but the ceiling remains low. Games are now hobbled by the limitations of their vocabularies. We need to pierce that ceiling.

I believe that the development of entertainment software for the next few decades will be dominated by efforts to increase the vocabulary size, giving the player a greater range of expressive opportunities. Until we accomplish this, games will necessarily remain puerile in their expressive range.

Greater contextual sensitivity

This increased vocabulary will impose a new constraint upon verb set designers: the requirement to restrict access to verbs to contextually appropriate situations. The typical game relies upon universally applicable verbs. Let’s consider the verb set for the typical shooter:

Turn right

Turn left

Forward

Backward

Go fast

Jump

Duck

Drop

Shoot/employ/use

Change weapons

Note that these verbs are universally available; a player could use any of these verbs at any time. This is dumb. An expanded vocabulary will entail the use of verbs that are only available when certain conditions are satisfied. This contextuality requirement runs afoul of many simple input structures, as there is no clear way to indicate the contextual obviation of a verb to the player. A few attempts have been made to do so with on-screen messages explaining the problem. For example, a player who tries to fire a weapon without ammo might be shown a quick message, "You’re out of ammo!" on the screen. But this solution is too clumsy for general application. More sophisticated contextuality calculations will need to be brought into entertainment software and presented to the player in a more gracious fashion.
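One simple way to represent contextually available verbs is to attach a condition to each verb and compute the available set from the current state. The Python sketch below assumes a made-up game state and made-up conditions ("reload" is not in the list above); how to present the result graciously to the player remains the open design problem.

```python
# Hypothetical verb table: verb name -> condition on the current game state.
VERBS = {
    "shoot": lambda s: s["ammo"] > 0,
    "reload": lambda s: s["ammo"] < s["clip_size"] and s["spare_clips"] > 0,
    "jump": lambda s: s["on_ground"],
    "turn left": lambda s: True,  # a universally available verb
}


def available_verbs(state):
    """Return the verbs whose conditions hold in the given state."""
    return [verb for verb, condition in VERBS.items() if condition(state)]


state = {"ammo": 0, "clip_size": 6, "spare_clips": 1, "on_ground": True}
print(available_verbs(state))  # ['reload', 'jump', 'turn left']
```

With the available set computed up front, the interface can decline to offer "shoot" at all rather than scolding the player after the fact.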

Less spatiality

Long have I railed at the obsession with spatiality that grips the mind of every game designer. Out of the thousands of games that have been produced in the last two decades, only a handful of designs lack some kind of internal map. Even text adventures, using no graphic display whatever, still rely on an internal map (which the player attempts to recreate during his peregrinations).

Folks, we’ve got to drop this maniacal obsession with spatial reasoning. The human mind is capable of many kinds of reasoning: spatial, social, linguistic, syllogistic, analogistic, aesthetic, and numeric. There is no reason for our games to be so narrowly focussed on this single dimension of human mentation. I predict that one of the major thrusts of future entertainment software will be the expansion into these other realms of challenge.

More linguistics

Horace Greeley advised the ambitious to "Go west, young man!" In like fashion, I advise, "Go linguistic, young designer!" The only way we will obtain greater verb counts, achieve greater contextual sensitivity, and address other dimensions of human cognition is through application of linguistic concepts. I am not arguing for natural language processing -- I fear that will remain beyond our reach during our lifetimes. But we can bring ideas from linguistics into our designs to expand our vocabularies. The "brain-jack" in the back of the head permitting direct connection from a computer to a brain is now a cliche of fiction, but in fact, we humans already have the hardware ports for high-speed communication. Moreover, we have highly developed software protocols that provide maximum data compression, permitting us to transmit and receive large amounts of information in amazingly short times. Those hardware ports are our ears and vocal system; the software protocol is language. I shall leave you with a few examples of highly compressed data transmissions using this protocol:

Where’s the beef?

Ontogeny recapitulates phylogeny

Apples and oranges

Make my day

That’s all, folks!