Humanity has made two gigantic cognitive leaps in its past, and is about to embark on a third.

The First Cognitive Leap...

...was the result of the integration of language into the human mind. All nervous systems are, in essence, pattern-recognizing systems. Any pattern of sensory inputs can be recognized in terms relevant to the creature. Our brains force us to recognize patterns even when we know they’re not there. We cannot help but see dragons, heads, and ships when we look at clouds. Sometimes the faint rustle of wind sounds to us like voices. We impose known patterns on random stimuli.

This is the lowest-level architecture of the mind. At the highest level, the mind is organized into mental modules — portions of the brain dedicated to solving particular common problems. The most pronounced of these mental modules is the visual/spatial mental module. It is very old and accordingly well-localized at the back of our brains (which is why you see stars when you are hit on the back of the head). This mental module arose to handle the complex processing of our visual inputs. Few people recognize how great is the difference between what our eyes sense and what our minds perceive, because the visual/spatial module operates at such a low level of our thinking that we are completely unaware of its workings. Perhaps the best indication of how much processing there is comes from various optical illusions that exploit computational oddities of our visual/spatial module to yield paradoxical results:

The visual/spatial module transforms our two-dimensional image into a three-dimensional representation of the image. You cannot help but see the man’s cheek as curved, or think that the ear is further away from your eye than the nose. Note also that the shortened version of the impossible object on the right does not fool your eye — that’s because less processing is required to assemble the parts into a visual whole.

There are many ways to slice the pie of the human mind into mental modules, and so there’s plenty of room for disagreement as to what the true mental modules are. Nevertheless, there are four mental modules that seem to enjoy broad recognition. The first is the visual/spatial module.

The second is the “natural history” module. It’s a hodgepodge of knowledge and thinking that allows us to understand how the world works. It’s generally structured as a mapping of causes onto effects. It’s the natural history module that tells us that dark clouds portend rain, that hitting one rock with another will cause the target rock to fracture in a certain manner, and so forth. Think of it as “folk science”: not precise, rigorous, or logical like real science, but instead something that is sometimes dismissed as “folklore” or “old wives’ tales”. The natural history module is critical to our survival in an unforgiving environment.

The third is the “social reasoning” module. This allows us to empathize with others, to understand their emotional states, to anticipate their reactions to situations, and even to manipulate them by such anticipation. This is obviously critically important to a species as social as ours.

The fourth is the language module. This huge module allows us to understand language in all its complexity. It consists of an encyclopedia of word meanings and pronunciations, plus a huge grammar analyzer. Again, the importance of this module should be obvious.

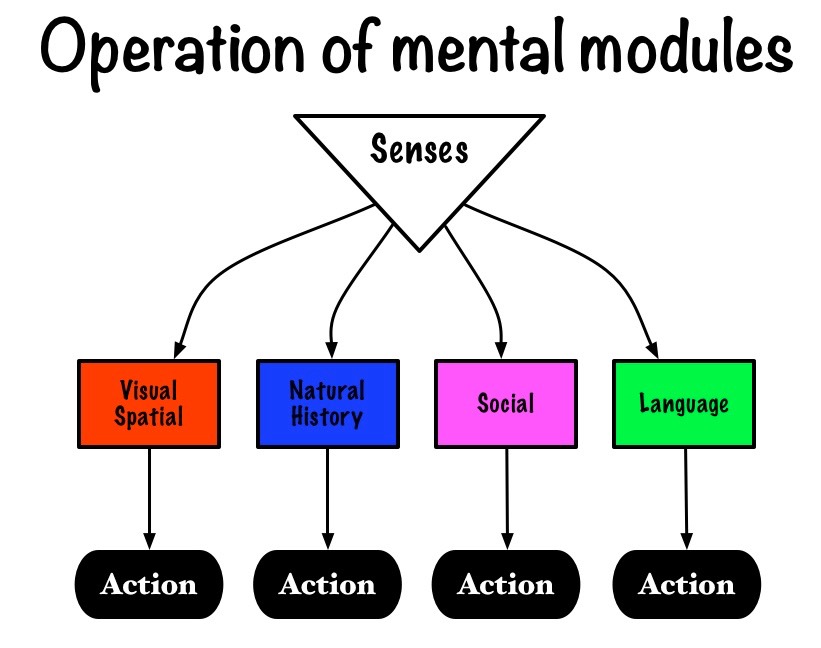

The original high-level architecture of the mind looked something like this:

Patterns of input from the senses would go to each and every mental module, and if that module recognized the pattern, it would jump up and say “That’s MY kind of problem!” and provide the answer, decision, or action appropriate for that pattern of inputs. It’s a very efficient organization, ensuring that, no matter what the input, some mental module will handle it. It’s also lightning fast, which is critical in a real-world environment.
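For readers who think in code, here is a rough sketch of that broadcast-and-claim arrangement. It is only an illustration: the module functions, the patterns other than dark clouds, and the responses are all invented, and nothing here is meant as a model of real brains.

```python
# Each hypothetical "module" returns a response if it recognizes the pattern,
# or None if the pattern is not its kind of problem.

def natural_history(pattern):
    # Folk-science mapping of causes onto effects.
    known = {"dark clouds": "expect rain"}
    return known.get(pattern)

def social_reasoning(pattern):
    known = {"angry face": "back away"}  # invented example
    return known.get(pattern)

def visual_spatial(pattern):
    known = {"looming shape": "duck"}  # invented example
    return known.get(pattern)

MODULES = {
    "natural history": natural_history,
    "social reasoning": social_reasoning,
    "visual/spatial": visual_spatial,
}

def dispatch(pattern):
    """Send the pattern to every module and collect whoever claims it."""
    responses = []
    for name, module in MODULES.items():
        answer = module(pattern)
        if answer is not None:
            responses.append((name, answer))
    return responses

print(dispatch("dark clouds"))
```

Unlike the mind described above, this toy version can come back empty-handed when no module recognizes the input; a fuller sketch would need some fallback module.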

Language Stages a Coup

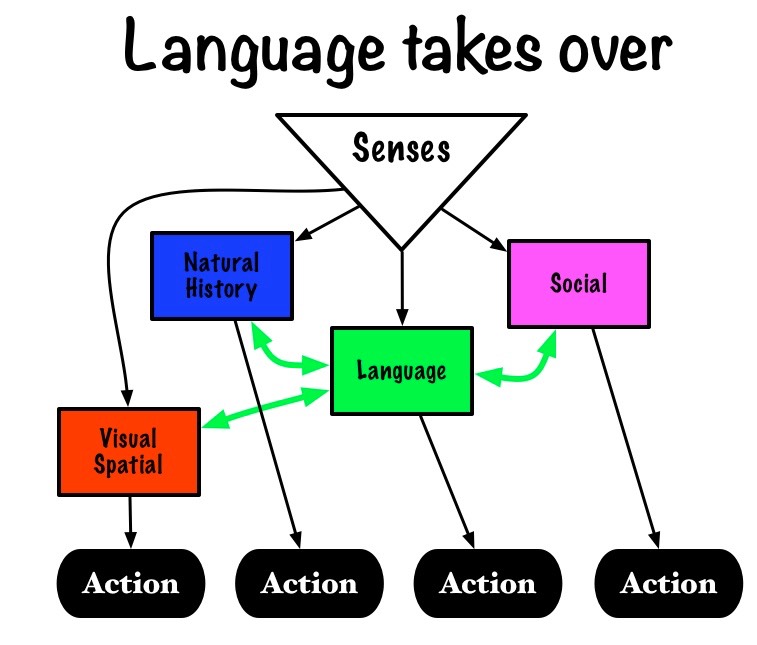

In order for the language module to do its job properly, it had to gain access to each of the other modules. Obviously, it couldn’t talk about the contents of other modules if it didn’t have access to them. (See this essay for more on the process). So language sent its tentacles all over the brain, infiltrating every module with its connections. This gave language a more important role in the operation of the mind, and pretty soon the language module was central to all activity:

I speculate that the language module is now approximated by our sense of self, or ego, or whatever you want to call it. “I” is really the language module. However, the old system was not destroyed; it continued as before. When we react to a situation instantly, we’re relying on our original system; when we ‘look before we leap’, we’re using the language module to integrate the decisions of several modules. We call the old-time pattern-recognizing behavior “emotion”, and treat the newer language-integrated response as superior, largely because it brings more mental resources to bear on the problem.

The mental modules interact

But the connections between the language module and the other modules were bidirectional; this made it possible for the disparate mental modules to interact with each other. This was what triggered the first cognitive leap. Here’s a shortened diagram showing just some of the interactions between the mental modules:

As you can see, the interactions between the mental modules led to lots of new ideas, in roughly this order:

Visual arts: the visual representation of social matters. Cave art, early figurines.

Storytelling: the use of language to teach how to handle social relationships.

Religion: the personification of nature as gods; that is, people who can be manipulated with prayer and sacrifices.

Writing: the visual representation of language

Science: the use of language’s multi-dimensional style to explain natural phenomena.

These were just a few of the results of the integration of the mental modules (for more on this, see this essay). The end result was something that archaeologists call “the Upper Paleolithic Revolution”. Suddenly, cave art appeared; so did a big expansion of the stone tool kit, as well as clothing and nets for catching fish. But the biggest result of the Upper Paleolithic Revolution was the discovery of agriculture. That changed everything.

The Agricultural Revolution…

…was the end result of the Upper Paleolithic Revolution. That’s what got civilization started, and is the most important of the many dramatic changes in human history. Thus, the first cognitive leap was the integration of mental modules, which eventually produced civilization.

The Second Cognitive Leap…

…was the direct result of civilization. The early farming communities had a simple structure: they were protection rackets run by a king and his thugs. They’d tell the farmers, “You give us some of your grain and we’ll protect you from the thugs down the road. And if you DON’T give us some of your grain, we’ll kill you.” It was an offer they couldn’t refuse. So cities arose with surrounding farmland. The cities were populated by all the people making luxury stuff for the king and his thugs.

But there was a flaw in this cozy little scheme. For some reason, the fifty bushels of grain sent to the city by a farmer might arrive at the temple as only forty bushels of grain. The kings were shocked and indignant that their people could be so unethical as to embezzle grain. But the problem grew worse and worse, until some genius invented the solution: the bill of lading. This was a record of what was loaded onto the cart, which was presented upon delivery. If the contents of the cart didn’t match the bill of lading, the cart driver was in big trouble.

The original bills of lading were made out of little tokens in various shapes that were encased in a little clay ball that was sealed up by the field administrator, and he put his seal on them to establish their authenticity. Later on, some lazy genius took to just drawing the shapes of the little tokens on the outside of the little clay balls. Thus was writing born. They just wrote the symbols for numerals alongside the symbols for various types of agricultural commodity, put their seal on it, and the job was done.

They followed the famous Microsoft strategy for improving the system: just keep adding more and more features (in this case, new words using new symbols) to meet every need. After a while, their writing system included several thousand unique symbols, all of which had to be memorized in order to read and write using this system.

Needless to say, this didn’t work too well; it took decades to master the written language, and the societies could not afford too many of these highly-trained scribes. Hence scribes spent almost all their time writing bills of lading, messages to far-flung government officials, and so forth, and they didn’t have much time to write about anything else.

Enter the barbarians

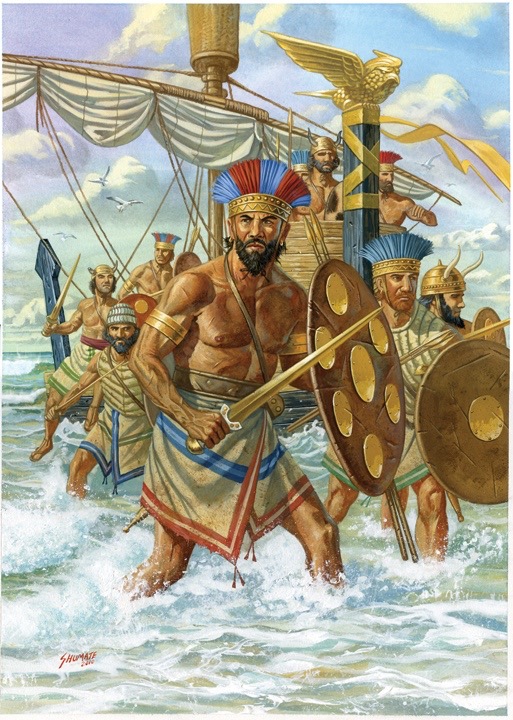

Sometime around 1200 BCE, a bunch of barbarians invaded the Near East. We don’t know much about them; in fact, we know almost NOTHING about them. The Egyptians called them “the sea peoples” and they drew lots of images that permit this speculative picture of what they might have looked like:

Nasty-looking characters, aren’t they? And dig those crazy hats! This is definitely Bronze Age Punk. Anyway, these fellows did the standard barbarian shtick: killing, burning, raping, looting, yada, yada. They destroyed the Hittite Empire; they annihilated the Mycenaean civilization in Greece and Crete; they destroyed just about every city on the coast of the eastern Mediterranean as far south as Egypt. And they gave the Egyptians a real run for their money; some pretty ferocious battles were fought and the Egyptians were still standing at the end of them, so I suppose they are justified in claiming victory. They continued this for at least a hundred years, probably longer. Eventually they disappeared, nobody knows where to. Perhaps they starved to death when there wasn’t any more grain to pillage. And they left behind an almost completely depopulated zone:

The entire highlighted area was left without any permanent human occupation. However, this situation didn’t last long; the eastern areas of this zone were quickly repopulated by colonists from unpillaged areas further east. Perhaps even the Hebrew occupation of Palestine was a response to this situation; after all, why would Pharaoh have let them go? You don’t believe that biblical plague nonsense, do you?

But the region around the Aegean Sea was not quickly repopulated:

This entire zone lay desolate, and the reason why is pretty obvious when you look at a modern photograph of one of the islands in the Aegean Sea (Skyros):

Look how barren this land is! See the rocks in the foreground? That’s typical of the landscape in the Aegean: rocky, barren, supporting only bushes and a little grass. You can’t grow crops on this land. And without farming, the only other source of food was in the sea. The problem was, nobody knew how to preserve fish in those days, so you had to eat it pretty quickly after catching it. What happens if you had stormy weather for a week? You had to choose between going without food — in which case you might well starve; or sailing into the sea to fish — in which case you’d probably sink and drown. People were forced to make this kind of choice all the time, and so the population in this region was tiny: just a few little clusters of families on the seashore, eking out a living as best they could. In good years, they expanded; in bad years, little groups simply died out.

Now, they tried to supplement their diets with nuts, roots, and berries, but this didn’t help much, until they discovered two berries that, by themselves, weren’t much use. Those were grapes and olives, and they grew just fine in the dry, rocky soils of the region. What was important about these was that you could use the grapes to make wine and the olives to make olive oil, both of which could be stored in big jars and would last for years that way.

The real value of these foodstuffs was not as dietary supplements but instead as trading goods. You see, the thugs who ran the farming-based cities discovered that wine makes you feel nice, and bread dipped in olive oil tastes much better. So they were willing to trade grain for wine and olive oil, at exchange rates extremely advantageous to the fishermen. (We’ll call them Greeks from now on.) A small family operation making just wine and olive oil could generate enough stuff to trade for grain that greatly exceeded their caloric needs. Well-fed women don’t miscarry as often as poorly-fed women. Well-fed children don’t die in childhood with the frequency that ill-fed children die. The net result was a population explosion. The Greek population doubled, and doubled again, and continued doubling for more than 500 years.

Who’s the boss around here? Nobody!

At this point, I must explain an interesting oddity about Greek society. It was scattered all around the Aegean, in little communities on islands and the coastline. At some point, of course, some Boss Thug and his minion thugs would move in and announce that they were taking over the joint. The local Greeks would simply get onto their ships and sail away, leaving Boss Thug and his boys to starve to death because there wasn't anybody to make the wine or olive oil.

Without functioning monarchies, the Greeks needed to develop a system of government that worked even if people got mad and went away. That system was an early form of democracy. It was nowhere near as pure as what we call democracy — it was more a matter of the head of each household having a vote. Still, it laid the groundwork for dramatic results.

Most people long for wealth and power, but you couldn’t take the direct route (using thugs) to power in the Greek world. The best path to power was to accumulate a lot of wealth. The path to wealth was simple: buy low and sell high. The problem was, how do you know what the prices are in the various ports? By this time, the Greeks had set up trading posts all over the Mediterranean and Black Sea, and the prices for grain were wildly variable. One measly barbarian invasion, peasant revolt, drought, or flood could ruin the crops and cause the price of grain in that area to skyrocket; no buying low there. On the other hand, there were always regions in the Mediterranean that had good years and offered lots of grain for a little wine and olive oil. But how could you, a Greek merchant, know which was which? It’s not as if they had email or cellphones back then. The only way to get a message back to home was to send it on a ship, which could take many months to make the trip.

Moreover, just getting letters from distant places quoting prices wasn’t very useful; all your competitors had exactly the same information, and if everybody went charging over to the one port where prices were good, the supply of wine and olive oil would be so large that prices would plummet. That’s no way to get rich.

There was only one way to get a leg up on the competition: anticipate future prices. If you could collect enough market intelligence about every port in the world, you could analyze all that data to figure out what prices would look like six months or a year into the future. But to do this, you needed a LOT of market data.

To do this, Greek merchants set up agents in all the important ports, with instructions to write home about everything and anything that might help an intelligent estimate of future prices. And that’s what the Greek merchant-agents did: they wrote about everything under the sun: what rumors they had heard of political unrest, what kind of clothes people wore, what gods they revered, and on and on.

Uh, how did they do that?

At this point, it behooves me to interject another consideration: literacy. You can’t be much of a merchant if you can’t read all those letters. And you can’t be a good agent if you can’t write. Thus, literacy acted as the gatekeeper to power. If you were young and ambitious, you learned to read and write. This led to something remarkable: the first society in which a goodly portion of society (or, at least, everybody who was anybody) was literate.

The Greeks were able to achieve high levels of literacy because they had two huge advantages over the Mesopotamians who invented writing. First, they had an alphabet, which they got from the Phoenicians. It’s a lot easier to learn a few dozen letters in an alphabet than a few thousand symbols for words. And spelling was nowhere near as difficult as modern English spelling is, because they actually spelled phonetically — what a concept! Once you learned the sound that each letter made, you knew how to read and write.

Their second advantage was that they used papyrus (paper) rather than clay tablets. This made it much easier to write and send long letters. Again, they didn’t invent this; they just stole the idea from the Egyptians.

Putting the pieces together

So now we have all these Greeks sending letters back and forth, trying to figure out everything about everything so that they could anticipate market prices so that they could grow rich and powerful. This sank deeply into Greek culture, and thus was born rationalism. Kings and pharaohs and priests didn’t have to know the truth. They could just make up whatever “truth” they wanted and if anybody contradicted them, it was “Off with their head!”

But the Greeks couldn’t indulge in such fantasies. They were up against hard reality, and any deviation from reasonable thinking inevitably led to mistakes and poverty. So rationalism became an obsession with the Greeks, and because different people can reason out different ideas in different ways, they developed a new concept: argumentation. This in turn led to a new desirable skill: rhetorical ability. The power to sway a crowd with eloquence and reason came in very handy in the semi-democratic Greek system.

This was a broad cultural evolution, not the result of some Greek Einstein coming up with these ideas out of the blue. However, there were three men who provide us a personal focus of the most crucial step in this process: Socrates, Plato, and Aristotle.

Socrates…

…took rationalism to its acme. He constructed arguments of depth and intricacy that challenged widely-held beliefs. Again and again, Socrates was able to demonstrate that what seemed reasonable was in fact wrong. Interestingly, Socrates refused to put his thoughts down on paper. He felt that the written word was useless because you can’t interact with it. He considered truth to be so complex, so intricate that its depths could be plumbed only by an interaction between two penetrating minds. The truth could not survive the process of paring down to skeletal form on the page.

Plato…

…was one of Socrates’ most devoted students. Despite Socrates’ contempt for the written word, Plato felt that it was important that he record Socrates’ thought for posterity, so he wrote down everything he could remember of Socrates’ teachings. These are the primary source for our knowledge of Socrates. Plato also wrote a great deal more of his own ideas.

Aristotle…

…was one of Plato’s students. Aristotle dutifully studied Plato’s records of Socrates’ conversations, and he was diligent enough in these studies to make a profound, world-changing discovery: Socrates contradicted himself. It wasn’t obvious — other people reading the same material didn’t notice anything wrong. The contradictions were subtle and widely separated. On page 19 Socrates would use a word in one sense and on page 38 he’d use the same word in a different sense — and that difference in sense lay at the core of his argument. Some of Socrates’ most surprising conclusions were wrong!

What’s important here is not that Socrates was wrong, but that Aristotle had made a discovery with much broader implications. That discovery was that writing provided a kind of accounting system for rationalism. You could read through an argument step by step, comparing each statement with every other statement, and verify that they were all consistent — or expose any inconsistencies. In other words, Aristotle discovered that writing was a navigational tool for rationalism.

But he didn’t stop there. He went on to invent the syllogism:

All men are mortal.

Socrates is a man.

Therefore, Socrates is mortal.

He showed that, so long as all your premises were correct and your logic was valid, you could extend this chain for as far as you wanted, and still be absolutely certain that your final conclusion was correct.
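That chaining can be sketched in a few lines of code. This is a minimal illustration, not Aristotle's own formalism: each rule stands for "all X are Y", each fact for "S is an X", and the second rule ("all mortals are finite") is a premise invented here purely to show the chain extending one more link.

```python
def derive(facts, rules):
    """Apply 'all X are Y' rules repeatedly until nothing new follows."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for subject, category in list(known):
            for premise, conclusion in rules:
                if category == premise and (subject, conclusion) not in known:
                    known.add((subject, conclusion))
                    changed = True
    return known

# ("man", "mortal") reads: all men are mortal.
rules = [
    ("man", "mortal"),
    ("mortal", "finite"),  # invented extra premise, to lengthen the chain
]
facts = {("Socrates", "man")}  # Socrates is a man.

conclusions = derive(facts, rules)
print(("Socrates", "mortal") in conclusions)
```

The conclusions are only as good as the premises: feed in one false rule and every link built on it inherits the error.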

Thus, Aristotle ascended to a higher plane than rationalism: logic. This opened up all sorts of exciting possibilities, but about that time Greece was conquered by Macedonia and the creative fire in Greek civilization began to go out. The Romans admired Greek civilization, but did little to advance ideas. Then came the barbarians and the Dark Ages, and so it wasn’t until Thomas Aquinas came along in the mid-1200s CE that the spark was renewed. He stole a great idea from a Jewish philosopher named Maimonides, who in turn had stolen it from a Muslim philosopher named Averroes. The motivation for the idea was the difficulty each religion was having in resolving theological disputes. Theologians everywhere were at each other’s throats, disputing all sorts of esoteric issues. Inasmuch as religion was a crucial component of the structure of these societies, theological disputes had political implications, and there was always a certain amount of blood being shed because of these theological disputes. Averroes realized that all those disputes could be finally resolved by applying Aristotelian logic to theology. Sadly, other Islamic philosophers were intimidated by the sheer volume of Aristotle’s writings, and they didn’t want to have to study that huge literature to understand theology, so they rejected Averroes’ proposal. The same thing happened to Maimonides. But Thomas Aquinas helped matters along by including a good explanation of Aristotelian logic in his grand opus, the Summa Theologica. Theologians could get a solid grounding in Aristotelian logic just by reading that book, so the resistance to Aquinas’ ideas wasn’t so strong. After the initial theological inertia, Christendom embraced the idea.

All the universities in those days concentrated primarily on educating the upper intellectual echelons of the priesthood, so over the next few centuries an entire class of people well-versed in Aristotelian logic arose. They never realized Aquinas’ goal of settling all theological disputes; indeed, in some ways they made matters worse by convincing each of the factions that they were absolutely, totally correct. But a side effect of all this came in 1543 when the Polish priest Nicolaus Copernicus published a book arguing in favor of the heliocentric model of the solar system: the sun is the center of the solar system, not the earth. Copernicus’ huge insight was to introduce numerical logic into the process. Pure Aristotelian logic is essentially boolean: it tells us that a given statement is either true, false, or indeterminate. Copernicus stayed within that framework, but he used numerical calculations to establish the truth of the heliocentric model.

His book didn’t cause much of a ruckus because all its calculations were too much for most people to understand. An elite group did understand it, but they were too small to threaten the dominance of the Christian church, and besides, they were priests anyway and didn’t want to rock the boat. They treated it all as a kind of imaginary vision of the universe, parallel and superior to reality, but not the same.

But 70 years later came Galileo, and this guy was definitely a rabble-rouser. He rocked the boat so fiercely that the Church had to shut him up. But his work, along with that of Johannes Kepler and Tycho Brahe, provided the foundation on which Isaac Newton built physics. Newton’s big idea was to replace Copernicus’ numeric approach with a purely mathematical approach. Where Copernicus had shown that the calculations worked only in a heliocentric system, Newton established the fundamental laws underlying the motions of the planets and just about everything else as well.

Thus, Newton ascended to a higher plane than logic: science.

That led to the Industrial Revolution and our current civilization.

The Third Cognitive Leap…

…has been set up for us but is only in the earliest stages. Its initial trigger is, believe it or not, games. Now, games have been with us for millennia, but the computer has made it possible to deliver games of such power that a large portion of the population spends a lot of time with games and is imbuing itself with a kind of thinking that is necessary to win: subjunctive logic.

Subjunctive logic is no more than the use of if-then relationships. Where deductive logic attempts to determine the one true answer, subjunctive logic tries to determine the many possible answers. Where deductive logic uses a number of premises to converge on a single answer, subjunctive logic starts with a single situation and diverges through a tree of possibilities to a number of possible answers.
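Here is a small sketch of that divergence, assuming the possibilities can be written down as a simple lookup from each situation to the situations that might follow. Every situation name below is invented for illustration.

```python
# A hypothetical tree of possibilities: each situation maps to the
# situations that could follow from it. All names are invented.
POSSIBILITIES = {
    "at the crossroads": ["go left", "stay put"],
    "go left": ["reach the river", "get lost"],
    "stay put": ["wait out the storm"],
}

def possible_answers(situation, tree=POSSIBILITIES):
    """Follow every if-then branch and return all the end states."""
    branches = tree.get(situation, [])
    if not branches:
        # Nothing further follows, so this is one possible answer.
        return [situation]
    answers = []
    for next_situation in branches:
        answers.extend(possible_answers(next_situation, tree))
    return answers

print(possible_answers("at the crossroads"))
```

Where the deductive sketch of a syllogism narrows many premises down to one conclusion, this one starts from a single situation and fans out to several.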

People have always done this kind of thinking, but only in a primitive fashion. The situation today with subjunctive thinking is analogous to the situation in past centuries with rationalism and logic. Five hundred years ago, most people believed in all sorts of superstitious nonsense: magic, demons possessing people, witches having sex with the devil, etc. Nowadays we all dismiss these ideas with a wave of our hands: it’s not logical. Our culture has bred logical thinking into us. Not terribly well, considering the political idiocies we’re still engaging in. But the concept of rationalism and logic has now seeped so deeply into our culture that we’re definitely more rational than people just a few centuries ago.

In the same way, our skills at subjunctive thinking are weak. For example, consider the common tendency to blame ourselves for a tragedy to which we contributed a critical step. “If only I had left work ten minutes earlier, the house would not have burned down.” In strict Aristotelian logic, that’s true:

It was an exceptionally cold day.

The heater ran all day.

There was a corroded wiring connection in the circuit.

The corroded wiring became hot enough to ignite paper.

Years ago, a bit of paper from above had fallen onto the corroded wiring.

The corroded wiring ignited the bit of paper.

In the hot airspace around the corroded wire, the fire spread.

The fire spread throughout the house.

I left work at the normal time.

When I arrived home, the fire was already strong.

I called the fire department.

By the time they arrived, it was too late.

The house burned down.

It is logical to observe that, had the self-blaming person arrived home ten minutes earlier, he could have called the fire department and saved the house. Therefore, he blames himself. This is correct deductive logic, but it’s not good subjunctive logic. Let’s think about this sequence of events subjunctively:

| Probability | Event |
| --- | --- |
| low | It was an exceptionally cold day. |
| high | The heater ran all day. |
| low | There was a corroded wiring connection in the circuit. |
| low | The corroded wiring became hot enough to ignite paper. |
| very low | Years ago, a bit of paper from above had fallen onto the corroded wiring. |
| high | The corroded wiring ignited the bit of paper. |
| high | In the hot airspace around the corroded wire, the fire spread. |
| high | The fire spread throughout the house. |
| high | I left work at the normal time. |
| high | When I arrived home, the fire was already strong. |
| high | I called the fire department. |
| high | By the time they arrived, it was too late. |
| high | The house burned down. |

A subjunctive thinker would have zeroed in on the four unlikely events and assigned most of the causality for the tragedy to those four events. Assigning causality to an event that is entirely normal and expected is not subjunctively logical. But we still blame ourselves if by some chance we could have taken an exceptional action that would have averted the tragedy.
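One way to make that assignment of causality concrete is to weight each event by how surprising it was. The sketch below is an assumption-laden illustration, not a method this essay defines: the numeric probabilities attached to "high", "low", and "very low" are invented, and the weighting rule (surprisal, the negative log of the probability) is just one conventional choice.

```python
import math

# Invented numbers standing in for the qualitative labels in the table.
ASSUMED_PROBABILITY = {"high": 0.9, "low": 0.1, "very low": 0.01}

EVENTS = [
    ("low", "It was an exceptionally cold day."),
    ("high", "The heater ran all day."),
    ("low", "There was a corroded wiring connection in the circuit."),
    ("low", "The corroded wiring became hot enough to ignite paper."),
    ("very low", "A bit of paper had fallen onto the corroded wiring."),
    ("high", "The corroded wiring ignited the bit of paper."),
    ("high", "In the hot airspace around the wire, the fire spread."),
    ("high", "The fire spread throughout the house."),
    ("high", "I left work at the normal time."),
    ("high", "When I arrived home, the fire was already strong."),
    ("high", "I called the fire department."),
    ("high", "By the time they arrived, it was too late."),
    ("high", "The house burned down."),
]

def surprisal(label):
    """Rarer events get a larger weight."""
    return -math.log2(ASSUMED_PROBABILITY[label])

def causal_shares(events):
    """Split the blame among events in proportion to their surprisal."""
    total = sum(surprisal(label) for label, _ in events)
    return [(text, surprisal(label) / total) for label, text in events]

shares = causal_shares(EVENTS)
unlikely_share = sum(
    share for (label, _), (_, share) in zip(EVENTS, shares) if label != "high"
)
for text, share in shares:
    print(f"{share:6.1%}  {text}")
```

Under these made-up numbers, the four unlikely events soak up nearly all of the blame, and leaving work at the normal time gets almost none.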

An even better example of our incompetence at subjunctive thinking is provided by Schroedinger’s Cat, a paradox in which a cat could be either alive or dead. This violates common sense, which does not incorporate subjunctive thinking. I believe that a person competent in subjunctive logic would have no problem with Schroedinger’s Cat, although I myself am not that person.

The role of games

Choice is the essence of game-play. Sid Meier’s definition of a good game singles out the principle: a good game presents us with a series of interesting choices. Game players make a plethora of decisions while playing, and each of those decisions requires subjunctive logic. If I go to the left, what might I encounter? If I hunker down here, will I be safer? Or perhaps I should run as fast as I can straight ahead — what might be the result of that? By using subjunctive logic over and over, people are exercising their subjunctive logic muscles and developing greater skill at it. And if you have a large community of people becoming adept at subjunctive logic, they’ll use it in other situations, and the style of thinking will spread throughout the culture.

This is a slow process. Just as it took centuries for rationalism to take hold in Greece, and millennia for its ramifications to be fully developed, the spread of subjunctive logic will necessarily be slow.

But wait! There’s more!

An even stronger influence comes from the millions of eager young kids who want to design games. These kids learn programming so that they can build their own games. They try to get jobs in the games industry, but there are far fewer jobs than aspirants, so they end up spreading out through society, taking their programming skills with them. Adding to their numbers are the zillions of web designers who learn programming skills.

The employment advantages enjoyed by people who can program will make programming literacy important to everybody’s career. Truck drivers will be expected to be able to write short programs for handling their rigs. People will communicate subjunctively, like this proposed compensation plan:

Total compensation = Salary + 0.75 x Health Benefits + $100 x (vacation days)^2

This gives the employee a broad choice of compensation schedules while protecting the interests of the employer.
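Written as a short program, the plan might look like the sketch below. The function name, the parameter names, and the sample figures are all invented here; only the formula itself comes from the plan above.

```python
def total_compensation(salary, health_benefits, vacation_days):
    """Total compensation = Salary + 0.75 x Health Benefits + $100 x (vacation days)^2."""
    return salary + 0.75 * health_benefits + 100 * vacation_days ** 2

# Two hypothetical packages an employee might weigh against each other.
more_cash = total_compensation(salary=52000, health_benefits=2000, vacation_days=5)
more_time = total_compensation(salary=50000, health_benefits=4000, vacation_days=10)

print(more_cash, more_time)
```

The point is less the arithmetic than the form of the offer: the employer states a rule, and the employee explores what follows from each choice.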

Everybody learns to program? Yeah, right!

I’m sure that the notion of universal programming skills seems naive to most people. It takes years of practice to learn how to program. Besides, programming requires a level of intelligence higher than the average. This is simply not possible, right?

This argument overlooks two crucial factors. First, Greek literacy arose because the Greeks grabbed onto the alphabet, which made literacy much easier than the old hieroglyphic styles. I think that we’ll see much the same thing with programming. Our programming languages right now are the intellectual analog of hieroglyphics. Our modern-day “scribes” (programmers) spend years mastering the art. It is complicated and difficult. There is a desperate need for a programming system that is within the ken of the average person. I believe that we will develop such a system, but not anytime soon.

The second crucial factor is mass education. Imagine yourself as an ancient Egyptian scribe teleported to a school in, say, modern Kenya. You see all these poor children learning to read and write. They’re not doing it to become professional scribes; they’re doing it as part of what is expected of a citizen of a modern civilization.

Each of the cognitive leaps has imposed an educational imperative on the population. The first cognitive leap, based on the integration of mental modules, was predicated on the mastery of language. It takes a child seven to ten years to learn his language. The second cognitive leap is founded in literacy, and it takes a child another five to seven years to learn to read and write. Thus, the modern citizen is expected to remain in school until something like age 15. We have already established that, as civilization progresses, higher levels of education are required of its members. Why shouldn’t everybody be required to learn to program, even if it means extending their schooling by two years?

Higher levels of subjectivity

We are currently at the very outset of the third cognitive leap; most people are still pretty weak in their subjunctive logic. We will need to advance in much the same sequence that we saw with deductive logic. First, we’ll need to formalize subjunctive logic. We’ve already done that in the form of programming languages, but only a few people can program. We’re roughly where the Greeks were shortly after Aristotle.

The next step will be the quantification of subjunctive logic: using numbers as well as simple booleans. This is the step that Copernicus took with deductive logic, and we’re still far from handling subjunctive logic with numbers (probabilities). Few people think in probabilistic terms, much less quantitative probabilistic terms. That’s the next big hurdle.

After that, we’ll need Newton’s step: using mathematics in our subjunctive logic, substituting algorithms for numbers in our thinking. We’re a long way from that.

The consequences

What will subjunctive logic do for us? It is impossible to know. Imagine a Greek philosopher a hundred years after Aristotle. He understands deductive logic, just as our programmers understand subjunctive logic today. Do you really think that this Greek philosopher could comprehend computers, orbiting satellites, or computer games? We’re wearing the same shoes when it comes to imagining the consequences of subjunctive logic.

Endnote: for more details on the history of thinking, see my multipage essay on the subject.